Found this thread after installing and working on a migration over the last couple of months… so I'm on the latest version.

There seem to be two places where status is reported:

- The Docker container status
- The watchdog status

Here is my guess.

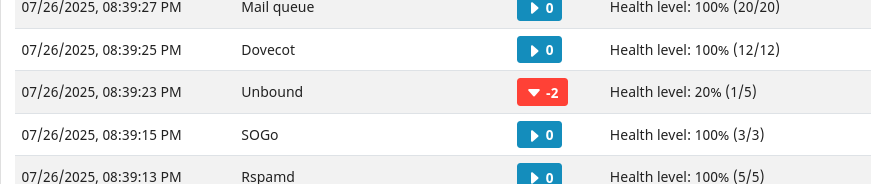

My container status always showed healthy, but the watchdog appeared to be getting DNS lookup failures during its check loop… so the watchdog service set the status for unbound to unhealthy (3/5) or worse, often (0/5).

The watchdog service would eventually trigger a restart of the unbound service, since it assumed unbound was failing.

So, jumping through the containers and running the commands manually, I discovered that the watchdog container in fact could not make calls to unbound.

I played all the netstat and tcpdump games…

All requests from inside the Docker network were being refused.
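If you want to reproduce that check without relying on whatever DNS tools happen to be in a container, here is a minimal Python sketch (my own, not part of mailcow or its watchdog) that sends a single A query over UDP and reports the response code. As I understand unbound.conf(5), a client that hits a `refuse` access-control rule gets RCODE 5 (REFUSED) back, while `deny` drops the query silently (you would just see a timeout), so a REFUSED answer points at access control rather than at a dead resolver. The function names `build_query`, `rcode_of` and `query` are made up for this example.

```python
import random
import socket
import struct

# A few common DNS response codes (RFC 1035 / RFC 2136 numbering).
RCODES = {0: "NOERROR", 2: "SERVFAIL", 3: "NXDOMAIN", 5: "REFUSED"}


def build_query(name, qtype=1):
    """Build a minimal DNS query (type A by default) with Recursion Desired set."""
    txid = random.randint(0, 0xFFFF)
    # Header: ID, flags (RD bit = 0x0100), QDCOUNT=1, ANCOUNT, NSCOUNT, ARCOUNT.
    header = struct.pack("!HHHHHH", txid, 0x0100, 1, 0, 0, 0)
    labels = [label for label in name.rstrip(".").split(".") if label]
    # QNAME: each label prefixed by its length, terminated by a zero byte.
    qname = b"".join(bytes([len(l)]) + l.encode("ascii") for l in labels) + b"\x00"
    question = qname + struct.pack("!HH", qtype, 1)  # QTYPE, QCLASS=IN
    return txid, header + question


def rcode_of(response):
    """Return the RCODE name taken from the low 4 bits of the header flags."""
    _, flags = struct.unpack("!HH", response[:4])
    code = flags & 0x000F
    return RCODES.get(code, str(code))


def query(server, name, timeout=2.0):
    """Send one UDP query to server:53 and return the RCODE name of the reply."""
    txid, packet = build_query(name)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)  # a silent "deny" shows up as socket.timeout
        sock.sendto(packet, (server, 53))
        response, _ = sock.recvfrom(4096)
    if struct.unpack("!H", response[:2])[0] != txid:
        raise ValueError("transaction ID mismatch")
    return rcode_of(response)
```

From any container on the same Docker network that has Python, `query("<unbound address>", "example.com")` should return `REFUSED` while the ACL is blocking you and `NOERROR` once it isn't. The unbound address depends on your compose network, so I'm not hard-coding one.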

Which led me to the unbound.conf…

```
server:
  verbosity: 1
  interface: 0.0.0.0
  interface: ::0
  logfile: /dev/console
  do-ip4: yes
  do-ip6: yes
  do-udp: yes
  do-tcp: yes
  do-daemonize: no
  #access-control: 0.0.0.0/0 allow
  access-control: 10.0.0.0/8 allow
  access-control: 172.16.0.0/12 allow
  #add my docker network
  access-control: 172.32.0.0/12 allow
  # end add
  access-control: 192.168.0.0/16 allow
  access-control: fc00::/7 allow
  access-control: fe80::/10 allow
  #access-control: ::0/0 allow
```

I added my Docker network (see the commented lines in the config above).

Et voilà!

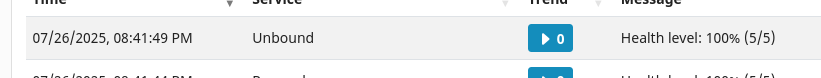

No more errors! The watchdog now reports unbound as healthy (5/5).
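For anyone wondering why one extra line fixes it: unbound.conf(5) says the most specific matching netblock decides, and anything that matches no rule is refused (localhost is allowed by default). 172.16.0.0/12 only reaches 172.31.255.255, so a source address in 172.32.0.0/12 falls through to the refuse default. Below is a small Python model of that matching, not unbound's actual code; the default rules are my reading of the docs, and the container address 172.33.0.5 is hypothetical, not taken from my setup.

```python
import ipaddress

# Defaults as I read unbound.conf(5): localhost allowed, everything else refused.
DEFAULTS = [
    ("0.0.0.0/0", "refuse"),
    ("::/0", "refuse"),
    ("127.0.0.0/8", "allow"),
    ("::1/128", "allow"),
]

# Active access-control lines from the config above, without my added line.
RULES_BEFORE = [
    ("10.0.0.0/8", "allow"),
    ("172.16.0.0/12", "allow"),
    ("192.168.0.0/16", "allow"),
    ("fc00::/7", "allow"),
    ("fe80::/10", "allow"),
]

# Same rules plus the line I added for my Docker network.
RULES_AFTER = RULES_BEFORE + [("172.32.0.0/12", "allow")]


def build_acl(rules):
    """Merge explicit rules over the defaults; an explicit rule for the same netblock wins."""
    acl = {}
    for netblock, action in DEFAULTS + rules:
        acl[ipaddress.ip_network(netblock)] = action
    return acl


def action_for(source_ip, acl):
    """Return the action of the most specific (longest-prefix) netblock containing source_ip."""
    addr = ipaddress.ip_address(source_ip)
    matches = [net for net in acl if net.version == addr.version and addr in net]
    # The /0 defaults guarantee at least one match for both IPv4 and IPv6.
    best = max(matches, key=lambda net: net.prefixlen)
    return acl[best]


if __name__ == "__main__":
    watchdog_ip = "172.33.0.5"  # hypothetical container address
    print(ipaddress.ip_network("172.16.0.0/12").broadcast_address)  # 172.31.255.255
    print(action_for(watchdog_ip, build_acl(RULES_BEFORE)))  # refuse
    print(action_for(watchdog_ip, build_acl(RULES_AFTER)))  # allow
```

Because the longest prefix wins, the order of the `access-control` lines doesn't matter; what matters is that some allow rule actually covers the subnet your containers live on.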

No idea if this is the issue for others… but now my dockerized mailcow appears to be running clean ;-)


I can’t really explain how the watchdog ever got a success; even the (1/5) makes no sense to me 🤷